Addressing the Challenges in Reasoning-Intensive Retrieval

Despite notable progress in retrieval-augmented generation (RAG) systems, retrieving relevant information for complex, multi-step reasoning tasks remains a significant challenge. Most retrievers today are trained on datasets composed of short factual questions, which align well with document-level lexical or semantic overlaps. However, they fall short when faced with longer, abstract, or cross-domain queries that require synthesizing dispersed knowledge. In such cases, retrieval errors can propagate through the pipeline, impairing downstream reasoning by large language models (LLMs). While LLM-based rerankers can improve relevance, their substantial computational cost often renders them impractical in real-world deployments.

Meta AI Introduces ReasonIR-8B, a Retriever Built for Reasoning

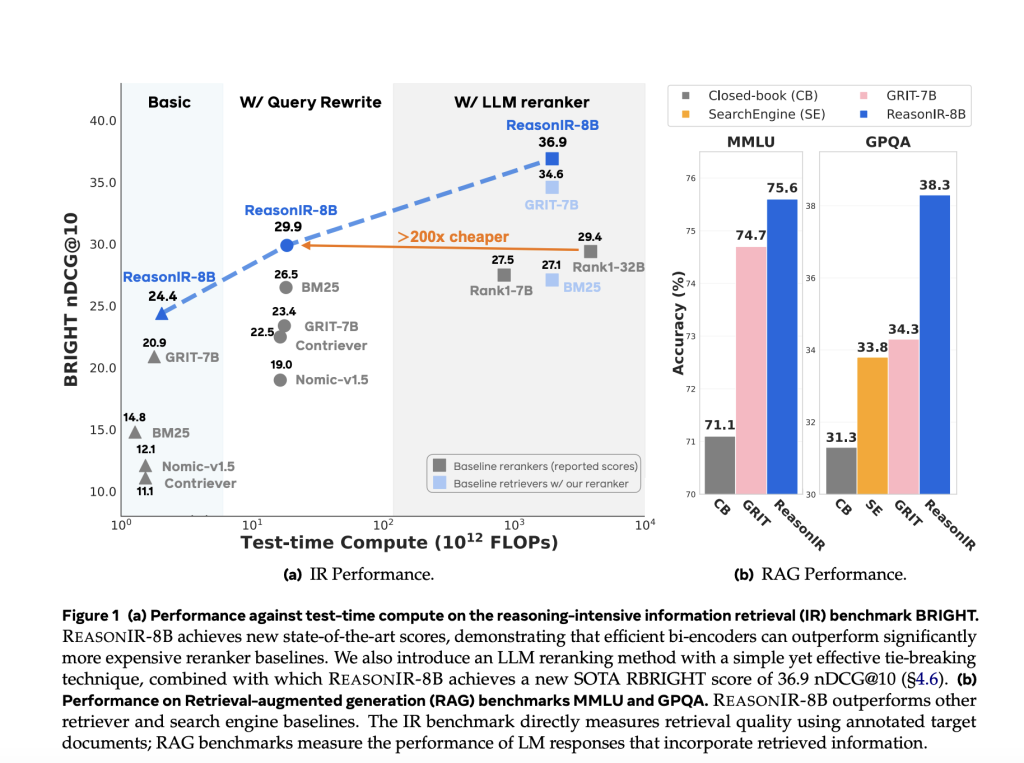

Meta AI has released ReasonIR-8B, a retriever model designed explicitly for reasoning-intensive information retrieval. Trained from LLaMA3.1-8B, the model sets new performance standards on the BRIGHT benchmark, achieving a normalized Discounted Cumulative Gain (nDCG@10) of 36.9 when used with a lightweight Qwen2.5 reranker. Notably, it surpasses leading reranking models such as Rank1-32B while requiring 200× less inference-time compute, making it significantly more practical for scaled RAG applications.

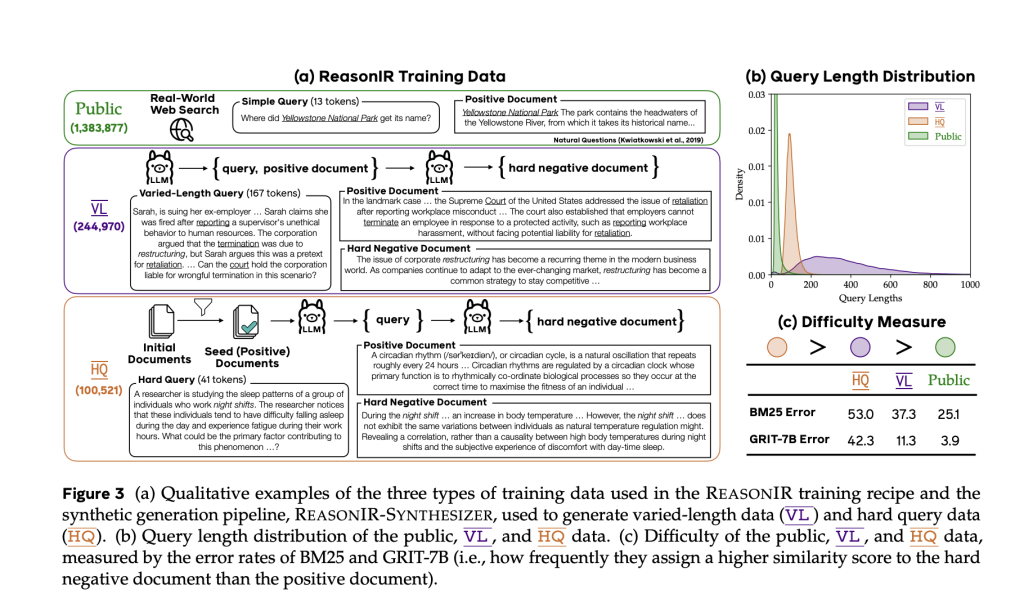

ReasonIR-8B is trained using a novel data generation pipeline, ReasonIR-SYNTHESIZER, which constructs synthetic query and document pairs that mirror the challenges posed by real-world reasoning tasks. The model is released open-source on Hugging Face, along with training code and synthetic data tools, enabling further research and reproducibility.

Model Architecture, Training Pipeline, and Key Innovations

ReasonIR-8B employs a bi-encoder architecture, where queries and documents are encoded independently into embeddings and scored via cosine similarity. The model's training relies heavily on synthetically generated data tailored to reasoning scenarios. The ReasonIR-SYNTHESIZER pipeline produces two primary types of training instances:

- Varied-Length (VL) Queries: These are long, information-rich queries (up to 2000 tokens), paired with corresponding documents, encouraging the retriever to handle long contexts effectively.

- Hard Queries (HQ): Derived from curated documents with high educational value, these queries are designed to require logical inference. Multi-turn prompts are used to construct hard negatives: documents that appear superficially relevant but do not contain the necessary reasoning pathways.

This approach contrasts with conventional negative sampling methods, which often rely on lexical overlap and are less effective for abstract or multi-hop questions.
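To make the role of hard negatives concrete, here is a minimal sketch of cosine scoring in a bi-encoder, followed by a contrastive loss. The article does not state which training objective ReasonIR-8B uses, so the InfoNCE-style loss, the `temperature` value, and the hand-written toy embeddings are all assumptions made for illustration only.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def contrastive_loss(query, positive, negatives, temperature=0.05):
    """InfoNCE-style loss (assumed objective, not confirmed by the article):
    negative log-softmax of the positive's score among all candidates."""
    scores = [cosine(query, positive)] + [cosine(query, n) for n in negatives]
    logits = [s / temperature for s in scores]
    max_logit = max(logits)  # subtract the max for numerical stability
    log_denominator = max_logit + math.log(
        sum(math.exp(l - max_logit) for l in logits)
    )
    return log_denominator - logits[0]

# Toy embeddings standing in for independently encoded query and documents.
query = [1.0, 0.0, 0.0]
positive = [0.9, 0.1, 0.0]
hard_negative = [0.8, 0.6, 0.0]  # superficially close to the query
easy_negative = [0.0, 0.0, 1.0]  # clearly unrelated

loss_hard = contrastive_loss(query, positive, [hard_negative])
loss_easy = contrastive_loss(query, positive, [easy_negative])
print(f"loss with hard negative: {loss_hard:.4f}")
print(f"loss with easy negative: {loss_easy:.2e}")
```

In this toy setup the hard negative produces a much larger loss than the easy one, which is the intuition behind preferring such negatives: an unrelated document gives the model almost nothing to learn from.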

Additionally, the model's attention mask is modified from LLaMA's causal configuration to a bi-directional one, allowing the encoder to consider the full query context symmetrically, which is beneficial for non-sequential semantic alignment.
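The difference between the two mask configurations can be illustrated with a small sketch. The helper names below are hypothetical and the masks are plain nested lists, not the tensors used in the released model; the point is only to show which positions each token is allowed to attend to.

```python
def causal_mask(n):
    """Token i may attend to token j only if j <= i (decoder-style)."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def bidirectional_mask(n):
    """Every token may attend to every other token (encoder-style)."""
    return [[1] * n for _ in range(n)]

def visible_positions(mask, i):
    """Indices of the tokens that token i is allowed to attend to."""
    return [j for j, allowed in enumerate(mask[i]) if allowed]

causal = causal_mask(4)
bidirectional = bidirectional_mask(4)

# Under a causal mask the first token sees only itself;
# under a bi-directional mask it sees the whole sequence.
print(visible_positions(causal, 0))
print(visible_positions(bidirectional, 0))
```

Under the causal mask, early tokens carry no information about what follows them. With a bi-directional mask every token's representation can reflect the whole query, which is what an embedding model needs.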

Empirical Results on IR and RAG Benchmarks

ReasonIR-8B achieves strong performance across several benchmarks:

- BRIGHT Benchmark (Reasoning-Intensive Retrieval):

  - 24.4 nDCG@10 on original queries

  - 29.9 with GPT-4 rewritten queries

  - 36.9 with Qwen2.5 reranking, outperforming larger LLM rerankers at a fraction of the cost

- Retrieval-Augmented Generation (RAG) Tasks:

  - +6.4% improvement on MMLU over a closed-book baseline

  - +22.6% improvement on GPQA

These gains are consistent across both standard and rewritten queries, with further improvements observed when combining ReasonIR-8B with a sparse retriever like BM25 or a lightweight reranker.
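For readers unfamiliar with the nDCG@10 metric used in the BRIGHT results, the following is a minimal sketch of how it can be computed for a single query. It assumes the input list holds a relevance label for every judged document, in ranked order, and uses linear gain. Benchmark toolkits may differ in these details, and reported scores are typically averaged over queries and multiplied by 100.

```python
import math

def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the top-k ranked relevance labels."""
    return sum(
        rel / math.log2(rank + 2)  # rank is 0-based, so the top result has discount log2(2) = 1
        for rank, rel in enumerate(relevances[:k])
    )

def ndcg_at_k(relevances, k=10):
    """DCG normalized by the DCG of the ideal (descending) ordering."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

# Relevance labels of retrieved documents in ranked order (toy data).
perfect_ranking = [1, 0, 0]
relevant_doc_second = [0, 1]

print(ndcg_at_k(perfect_ranking))
print(round(ndcg_at_k(relevant_doc_second), 4))
```

A ranking that places the only relevant document first scores 1.0, while pushing it to second place is discounted by a factor of 1 / log2(3).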

Importantly, the model continues to improve as query lengths scale, unlike other retrievers whose performance plateaus or declines. This suggests that ReasonIR-8B can better exploit information-rich queries, making it particularly well-suited for test-time techniques such as query rewriting.

Conclusion

ReasonIR-8B addresses a key bottleneck in reasoning-focused information retrieval by introducing a retriever optimized not only for relevance but also for computational efficiency. Its design, rooted in synthetic training tailored for reasoning and coupled with architectural and data-centric improvements, enables consistent gains in both retrieval and RAG tasks.

By releasing the model, codebase, and training data generation pipeline as open-source tools, Meta AI encourages the research community to extend this work toward more robust, multilingual, and multimodal retrievers. For applications requiring cost-effective and high-quality retrieval under reasoning constraints, ReasonIR-8B represents a compelling and practical solution.

Check out the Paper, Hugging Face page, and GitHub page.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
